While chatbots aren’t new, the insights world is just starting to wake up to their potential. Mike Stevens of Insight Platforms recently revealed that more research vendors are now offering chatbot-enabled solutions that promise to make engaging customers easier and more seamless, and to deliver insights faster than ever before. Market research, it seems, is on the cusp of entering the chatbot era.

That said, when new research trends emerge, it’s critical that we take a step back and understand their impact not just on data quality but also on the experience of respondents. In my opinion, any new technology that does a fine job of collecting qualitative and quantitative data but fails to deliver a good user experience is a non-starter. A good experience means people are more likely to come back and continue to provide their honest feedback. On the other hand, a subpar experience hurts the reputation of the market research department and diminishes the overall brand of the company.

Given that more insight teams are now exploring the use of chatbots for research, the time is right to put this tech under the microscope. At Rival Technologies, a core product in our platform is what we call chats—chatbot-enabled surveys hosted through messaging apps (like SMS and Facebook Messenger) and web browsers. My role as a Senior Methodologist in our company is to test our own solutions and make sure that they are up to snuff, so I’ve had the opportunity to get my hands dirty with this new technology.

Recently I ran a study to examine the impact of chat surveys on the respondent experience. The study also looked at whether the data and responses you get from chats are significantly different from those of traditional online surveys, and what impact, if any, chats have on the demographic composition of your sample.

Why messaging platforms?

Before I dive in and share results from our study, I’d like to address one potential question from veteran market researchers: why send surveys via messaging platforms?

The answer can be boiled down to two words: email overload. Respondents are getting so many emails (not just from researchers, but also from marketers) that they are ignoring survey invites as a result. A 2017 study found that 74% of consumers are overwhelmed by emails. More recently, Adweek revealed that more than 50% of emails are deleted without ever being read.

This may sound dramatic, but I do think that finding ways to reach respondents beyond email surveys is critical to the industry’s future. Messaging and SMS have become popular ways for people to communicate with friends and family, so these are channels that we need to figure out as an industry.

A parallel study comparing chats with traditional online surveys

To compare the chat experience with the traditional survey experience, we ran a multi-stage project in July 2018. We used the same set of questions for both chats and traditional surveys; the topic was people’s attitudes toward and usage of sunscreen. We chose this theme because it is relevant to most people, and it is also a topic that a company might explore in a real study.

The sample for both surveys also came from the same source: InnovateMR’s panel and river sample. At the end of the surveys, we asked people about their experience.

We engaged thousands of consumers and uncovered insights on the real benefits of chats as a potential alternative to the traditional online survey. Here are some key takeaways from this research-on-research.

Takeaway 1: Chats deliver a more enjoyable respondent experience

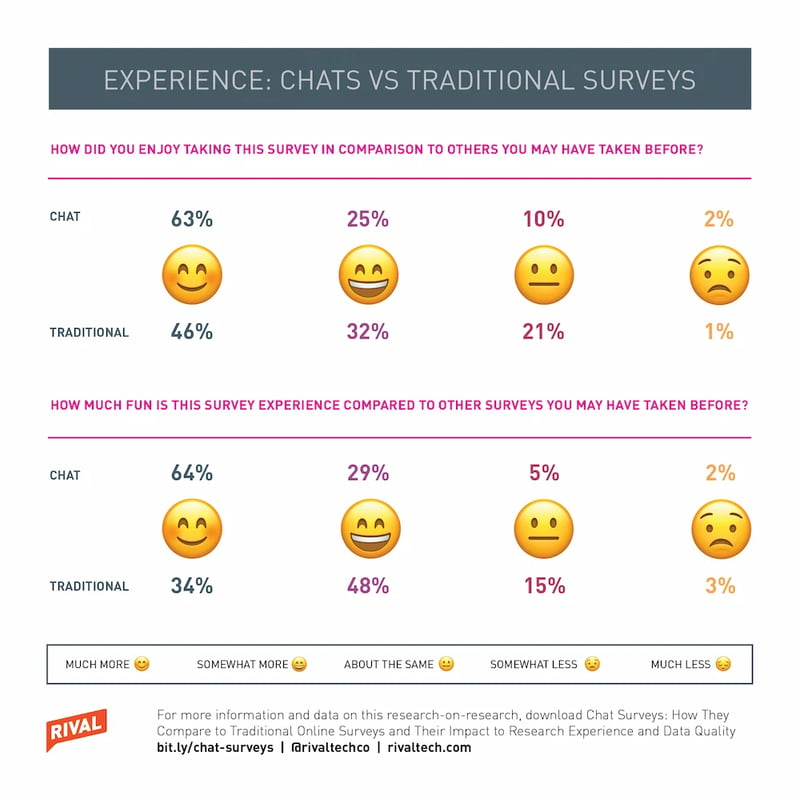

The study asked respondents about four different aspects of their experience: enjoyment, fun, ease and length.

Regarding the first two, the data is very clear: people like the experience with chats much more than they do with traditional online surveys. An overwhelming 88% of participants told us they found the chat experience either “much more” or “somewhat more” enjoyable than other surveys they’ve taken in the past. By contrast, only 68% of those who took the traditional survey said the same. (It’s worth noting that for the traditional survey, we applied survey design best practices.)

So you might be wondering why people love chats. Open-ended responses revealed that respondents liked that chats felt more like a conversation than a survey. In my opinion, the fact that chats allow a back-and-forth with the respondent and make it easy to use emojis, GIFs and videos also contributes to the good feedback we received about the experience.

Takeaway 2: People find chats easier to complete

The chat interface and experience are familiar to respondents since chats use the same channels people use to message their friends and family. But as a channel for sending out surveys, chats are new. So I was curious if research participants found the new experience difficult.

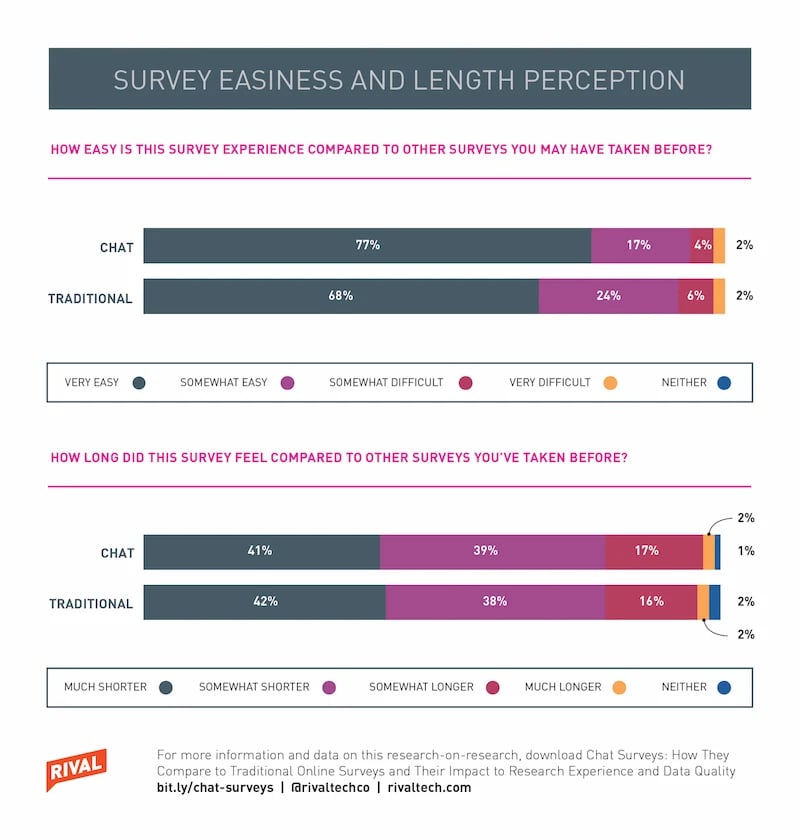

The data shows that chats are seen as at least as easy to complete as a traditional survey, if not slightly easier.

We also looked into survey length. This is an important part of the experience because if the activity is too long, it could result in frustration on the part of the participants.

Our data shows that people who took the traditional survey and those who did the chats had similar perceptions of the length of the activities. This isn’t surprising since we used the same number of questions for both activities. It is, however, worth noting that we asked fewer questions than most surveys would.

Takeaway 3: Chats do not introduce demographic skews

I was pleased to see that people love chats, but as a veteran market researcher, I wanted to know if this new way of engaging consumers attracted certain demographics more than others.

Chats lend themselves well to distribution via SMS and messaging platforms—channels that are very popular with younger consumers. So while it makes logical sense that a younger demographic would find chats appealing, some researchers might wonder if this new way of engaging consumers would alienate other demographics.

Our finding: No skew was introduced into the data as a result of sending panel sample to a chat as opposed to a traditional survey. For one, there are no significant differences between the two groups in terms of age distribution. There is a small increase among younger (under 44) consumers in the chat data, but nothing that would suggest older consumers were put off by the new survey method.

Gender, area (rural, suburban and urban), education and race distribution were also comparable in the two groups. You can download the full report if you’d like to see all the charts.

No big differences in data either

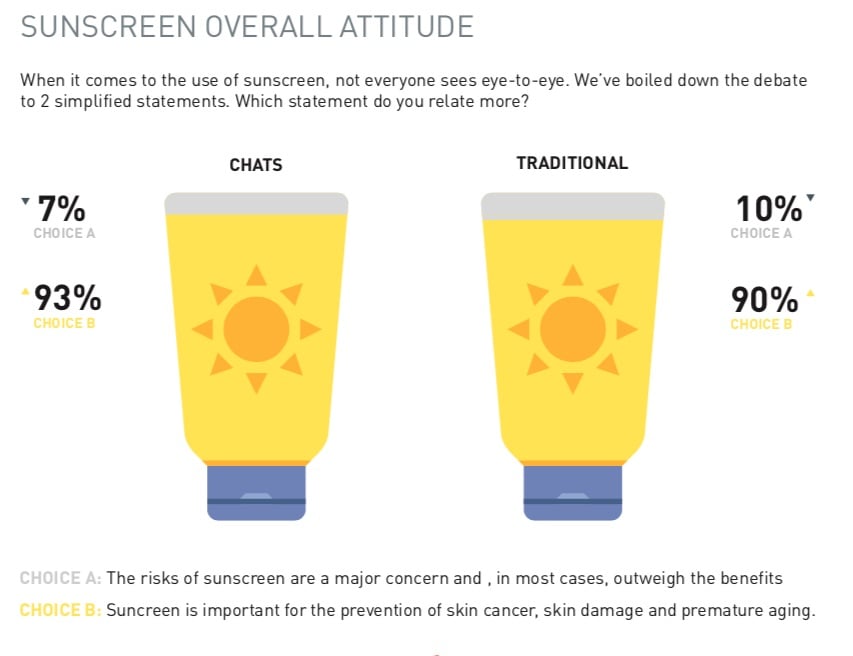

So, you might be wondering about the actual results of our chats and surveys. (As a reminder, our questions were about sunscreen usage and attitudes.) Did the “chat people” have a different view from those who took the traditional survey?

Not really. The results from the two groups were actually very similar. The same conclusions can be drawn from the two data sets. Here’s an example:

This isn’t super surprising. After all, research participants for this study came from the same sample source and, as we discussed earlier, had a similar demographic composition. In this study, the chat format itself did not introduce any skew when the questions and the sample source were the same.

More research-on-research on the way

We’re in the super early days of chats, and my intention is to continue to test this innovation as the technology evolves. In particular, keeping an eye on respondent experience is a big priority for me and my team.
